As part of our podcast series, we spoke to Dr Anders Kofod-Petersen, Deputy Director of the Alexandra Institute in Copenhagen, and Professor of Artificial Intelligence at the Norwegian University of Science and Technology. In this interview, he talks about the importance of building AI responsibly, the notion that IT projects should be viewed as organisational projects, the benefits of interdisciplinary work, and more. Anders is speaking in the FinTech stream at the 2020 conference.

Can you tell us a bit about your background and career path, and how you got to be where you are now?

That’s a good question! I started out trying to become a chef. That didn’t work, so I opted for Plan B. I like Sci-Fi. So I thought, how can I make Sci-Fi, without being a writer? Then, at some point I stumbled on AI, and I thought it ticks a lot of boxes. It fulfils an engineering need to build something clever; but it equally ticks the science box. Intelligence, whether it’s computational or biological, is a huge wonder. I’ve been doing this for twenty-odd years, and then I ended up here.

You’re the co-director of the Alexandra Institute. Tell us a bit more about your role, and what that involves.

We’re a private company, but we have a rubber stamp from the Ministry of Research. So our main purpose is to make sure that our customers, being primarily Danish companies, make some more money – so they can hire more people, who can pay more in taxes, so I can keep on sending my kids to school for free – that’s basically what we’re supposed to do. And, of course, we should make a profit along the way so we can pay salaries and stuff like that, but that’s kind of the secondary thing.

Our main purpose is to take all of this marvellous tech research coming out of universities, and ensure it can flow into companies as fast as possible. Preferably yesterday! So that’s basically what we do. We solve difficult, cool problems.

So is the Institute unique in Denmark, or are there other institutes that exist?

We are unique in the sense that we are horizontal. So formally, we do not really have any domain knowledge. This is of course not completely true. But formally we don’t, because you can basically shove computers into everything. So anything you can dream of putting power into, we play around with. That’s anything from flying robots, now known as drones, to arty installations where you can chat to pieces of art, to churn prediction in financial institutions. If you can think about it, we can probably put some digital stuff into it.

Are there any other universities or departments that you work with in Denmark?

We work with all universities in Denmark. Despite having employees who are not computer scientists, we are clearly a tech company. So, we have a lot of computer scientists and computer engineers. Obviously, we work more closely with the computer science department and engineering departments at universities. But we also work with economics and social science departments.

Do you have any success stories that you can share with us?

It would be really nice if I could just say, “Yes, I have this marvellous story, and there are so many of them!”. So, my favourite story right now is about natural language processing. The state of the art in natural language processing is actually pretty good. On most standard tests you can think of, like transcribing or speaking text, as long as they do not involve too much proper understanding, modern methods will in general beat humans.

For obvious reasons, most of this research is done on the English language, and I represent a very small language group. On a good day, there are probably seven million of us who speak it. So, despite the state of the art being really high, the actual state of the industry in Denmark was more or less non-existent a few years ago. So we’ve been working quite a lot on taking state-of-the-art technology, massaging it, and making it accessible for companies around the world. But, for obvious reasons, mostly Danish companies.

I think that’s a huge success story. If you want to do something with language, it involves a lot of work. Currently many companies are basically doing the same thing, which I find completely idiotic if we can do it together. So, we are now running a Danish natural language processing repository, which is open source and accessible for all companies. So, we raised the bar for small companies that want to do something cool. I think that’s currently my favourite.

How important is collaboration for you?

It’s everything! To a certain degree we kind of look like a consultancy company. I used to run one, so I know how they work. My favourite business model for a consultancy company is going into a company, building something, and saying, “You now have a thing, tada! Here’s a bill, call me when it doesn’t work, because I can send you a new bill!”. It’s a marvellous model, right? We don’t really like that, and that’s down to this whole idea that companies are supposed to become better.

So, we do much more collaborative types of projects. It’s a success in itself. This is going to sound silly, but it’s actually a success if the customer doesn’t call back. Because that means that we have solved the problem, and we enabled them to keep on working with it, and that requires a much higher degree of collaboration than the classic, “Here are a bunch of consultants, I’ll see you in August”. So collaboration is at the core, both across companies and across disciplines.

What do you think is the value of interdisciplinarity, and also the value that you believe social scientists can bring to some of the projects that you’re working on?

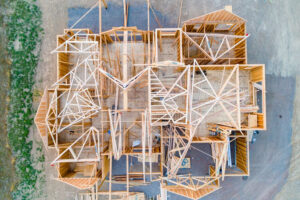

It’s very rare, if ever, that you will just call one guy and he will build a house for you. There’s a reason you employ these different people with different competencies, and that maps directly onto my world.

I think it’s pretty easy to talk about the value. It’s kind of like building a house. You’re going to call a plumber, an electrician, and so on: the people that you need to build a house. It’s very rare, if ever, that you will just call one guy and he will build a house for you. There’s a reason you employ these different people with different competencies, and that maps directly onto my world. I estimate that probably 80% of our staff [at the Institute] have a natural sciences background. The rest are anthropologists, people with a background in economics, I think we have an architect…

It basically boils down to the fact that I like building stuff. Unfortunately, these things tend to be used by humans, and humans are difficult. So, at the bare minimum we need somebody who can understand these weird humans! Or it’s just going to be another black box in the corner going bing.

I think it’s particularly interesting in my area. The current state of AI is that we can do many things that just a few years ago we would hire people to do. That could be pseudo-automated customer service or, as we talked about, churn prediction. All of these things that, just a handful of years ago, you would normally hire people to do, we can now do with the computer. It changes the computer from a very passive instrument.

Computers are basically just hammers. They are on your desk, and waiting for us to press something, and when I press something the computer goes whee. Then it waits for me to press something else. But we are slowly moving into these mixed initiative systems where my computer might actually ask me to do something.

So it’s a co-operation now, and that moves IT projects even further into being an organisational project. I usually tell people if they’re going to do an AI project and are looking at it as an IT project, it’s far better to just give me half the money, keep the other half, and don’t do anything. Because we both made money and it’s not a disaster!

These projects are organisational development projects, and they are going to change your organisation. You’re getting a new colleague, that just happens to be made out of silicon. So, we can’t do these projects without somebody actually being able to mediate human to human, and human to machine. We can’t do that unless we’re interdisciplinary. So, anthropologists play a pretty important role now.

Do you think your view on interdisciplinarity is the norm in Denmark?

That’s actually a pretty interesting question. I think that if you ask people like me, I would probably say that’s close to the norm. I think if you ask within more classic companies, I’m not convinced that’s the norm. We still build a lot of crappy IT systems, for example.

We have a very large and strong public sector in Denmark, and obviously they buy a lot of IT. And we managed to put together very complicated systems for buying things in the public sector. They spend two years writing the requirements. Then after two years they’re obsolete. And then you’re going to build a system in two years, and that’s also going to become obsolete.

Then you end up with a bill with far too many zeros, and it’s not going to work. All of this could have been done better, both process- and people-wise. And in that sector, purchasing the old classic way, I’m not sure that that view pertains as much. But I think it’s getting there. I see more and more anthropologists in classic companies, and also in the public sector. So, they’re probably getting there.

The push to make things happen more quickly can lead to the sidelining of social scientists and anthropologists. Thinking about the Agile model, how do we strike the balance between adequate research, and speed of delivery?

I always liked the Agile way; not Agile with a capital A, because that’s the most dogmatic process that ever existed. But agile as a term is probably the most sensible way of doing things. Agile processes need more flexibility than the consultancy-driven Agile dogma allows. We run a risk (I’ll probably get yelled at by my Agile colleagues) of trying to chop everything into pieces that are too small.

Some things just take time, and are difficult. And even though I like the idea of building stuff fast to get it out there and to see if it works, difficult problems often require thinking before doing. There seems to be a tendency, if we are very Agile, that we start by doing stuff and then thinking afterwards. That’s the wrong way round. So, I think there are good and bad things about this. But generally, I think moving into AI, it is an experimental science, if it’s a science at all.

How would you define responsible AI?

So, to be annoying, it doesn’t exist! AI can’t be responsible, because it’s amoral. It’s the usage which we can debate whether it’s responsible or not. It might sound like semantics, but it isn’t really. We have a tendency to say, “Your AI has to be responsible”. It can’t, how should it? It doesn’t know anything!

So, there’s clearly responsible usage of these things. Again, I’m not sure that it makes sense to talk about responsible usage of AI. I think it basically boils down to being responsible, period. Because there are clearly cases where it’s beneficial to have a decision made by a human. There are clearly cases where it’s beneficial to have a decision made by a nonhuman.

At the end of the day, it shouldn’t matter who makes a decision. The same level of requirements should be met with respect to being responsible or ethical. Sometimes I think we are derailing the healthy debate, because we stamp this AI thing on it. And we tend to have an implicit idea that humans are the gold standard.

The textbook example is from the US a few years back, where they used (and probably still use) a machine learning system to predict how many years in prison you should get. There was a lot of debate about the AI being unethical or irresponsible. But it didn’t come up with that solution. It was taught that solution by chewing through thousands and thousands of court verdicts. It learned how we treat people in the courtroom. That might be an unethical way of treating people, but it’s just mirroring our behaviour.

I like the quote from, I think it’s Dennett, who said that he loves AI because it keeps philosophers sober. And I think it’s brilliant, because many of the things we do with pretty simple AI techniques today are basically just a mirror we’ve put up, saying, “I didn’t come up with this, I’m just doing what I was taught”. So, if you don’t like the responsibility or the ethics, the problem is over there. And I think we sometimes use it, perhaps not necessarily consciously, as an excuse. Because we can point at the AI system going, “That’s misbehaving”. It kind of closes down the debate somehow.

I can’t think of a single example of irresponsible or unethical AI which is not just an automation of stuff we’re doing as humans anyway. And since I am in complaining mode, I think that we also tend to mix up the idiotic things we did by allowing huge monster companies to monopolise the digital world.

That’s an idiotic thing. But we mix that up with the AI discussion. Clearly they are using AI to further their goals, but it is a mixture of idiotic policies and technology. I think we should keep the two apart, because there’s plenty to discuss about these monster companies, but there’s also plenty to discuss about employing technology in any domain. By mixing them up, we tend to end up pointing in the wrong direction.

“I’ll now be building an AI system, which might contain humans or not, for a specific purpose. And I’m going to be doing it in a responsible, ethical way”. That’s an interesting debate.

My argument would be that I think we should put AI into the courtrooms. Because if we don’t like the way that we are sentencing people, we can pull this AI software apart, and we can fiddle around in the engine room, and put it back together, until we are happy with the result. We can’t do that with judges.

First of all, it’s a mess pulling them apart; and secondly, we are not allowed to do it! It doesn’t really work that way. Humans are strange, and we can’t fix that type of problem. So I think that there are many examples where the only responsible thing to do is bring in an AI or a piece of software. It could be just statistics. It would be much more interesting if we took away the AI term, and just talked about how we can be responsible.

What’s the commercial imperative for companies to design and develop ethically?

That’s the interesting part, that currently there isn’t a sustainable business model for doing things the proper way. There’s only a sustainable business model for doing things improperly. That’s the sad truth. There’s an argument to be made, and perhaps in particular within the EU, because there’s a lot of work on this trustworthy, human-centric, or whatever term is fashionable, AI.

And of course, you can say we can probably regulate some of it. I think there’s room for some sort of regulation somewhere. But at the end of the day, regulation is basically just admitting that we lost it. So there’s another argument to be made that I think is more interesting: there is a growing concern among consumers with respect to data ethics.

There might actually be a business model on the horizon, where if you can stand up and say, “I’m treating your data in a sensible manner”, that that might actually give you a commercial edge. I think we’ve seen a few examples where we’re getting into that area. We’re not completely there yet but there’s an opening. Which I personally prefer over regulation as a general rule, because regulation is far too difficult.

Would you say that this new commercial model is taking a long time to gain traction?

Yes. That’s because we as consumers never understood that we are paying for services, because we’re used to paying in some sort of monetary currency. We’ve been taught that consuming a digital service does not require you to put money on the table. So, in a sense, it’s free. But it’s not, because you’ve been paying with something as simple as bandwidth, and you’ve been paying with data.

So, you are actually paying for the service; it’s just another currency. I think it’s very difficult to understand because it’s not tangible. But we have always been paying for these services. I think one solution is to start teaching people that it’s a currency and it has some sort of value. You can calculate the value. You can just take the value of Facebook, divide it by its number of users, and you know your worth! But until we as consumers understand that we are actually paying, just in a slightly different currency, I think it’s a slow-moving vehicle.

How can businesses design and develop AI that doesn’t negatively impact people in society?

To some degree it basically just boils down to doing things properly. It’s like the guy building your house. You can have a very good carpenter, or you can have a very crap carpenter. If we just strive to become good carpenters – I mean, that’s very easy to say – but that’s what would solve the problem. Just do things properly.

So clearly there’s a lack of competence, and a lack of numbers. There aren’t that many people who are highly specialised in this area, for obvious reasons. It takes roughly 25 years to produce someone with a master’s in computer science. So, it takes a while to get these people out there.

I think there’s a huge competence gap among the people purchasing this technology. It’s smoke and mirrors you’re buying, and it’s a difficult matter to get into. But I always return to my first love, the scientific method. If we stick to that we are on the right path, because it’s a matter of asking ourselves: What am I trying to do? Do I know what I need to do this? Do I have a proper testing method? Can I test the result? Can I test the robustness? All of these questions.

It’s a mixture of classic scientific method and proper engineering. And if we just do that, then the whole responsible, ethical thing is at some point going to be a natural part of this. Because at the end of the day, what drives this is whether or not you make a surplus as a company. So, if consumers are paying you money because you do it in the proper way, and not paying the other guys because they don’t, that’s your imperative to do it.

And then of course, you can be hit by some sort of regulation, as a bonus. But at the end of the day that’s basically what drives it. I think that, in general, we as companies are probably underestimating the unforeseen consequences of what we’re building today. And that doesn’t even have to be about being responsible and ethical. Are we actually sure that when we build these models, we can identify that they’re drifting? Can we identify that the world is drifting? Those are all the difficult parts of building AI or machine learning systems that, as a general rule, we are probably not sufficiently good at yet.

There’s actually something to be said about the anthropologists here. As a generalisation, I would also argue that anthropologists know too little about technology. I think more tech in anthropology education would be a very good thing. Traditionally, I think anthropologists have been most comfortable doing observations, and then saying, “I observed XYZ”. They have been really uncomfortable going on to say, “So if you are doing XYZ, and you want to achieve something, you should do this”. But that’s getting better.

So in a sense, I could argue that anthropology is almost becoming grown-up now. It’s not just observing anymore. It’s actually also saying something about what you should do. And if we combine this very nice trend with a bit more tech, I think we are on the happy trail.

I think the best thing I do when sitting here in my office is looking out into the hallway, towards the coffeemaker. There’s a guy with a PhD in some very obscure computer science thing, and there’s a guy with a PhD in some very obscure anthropology thing. And they are chatting to each other at the coffeemaker, and they appear to understand each other. I mean, that’s a happy day. And I think that’s what we need: more tech for the anthropologists, and more anthropology for the tech people.

You can find out more about Anders’s work here.

You can listen to the full interview here.